When

Thu 6 Nov 2025

6pm

Three leading experts – spanning conflict reporting, environmental disasters and politics – dissect the evolving challenges of trust, credibility and media integrity in perilous times.

Artificial intelligence (AI) is transforming the way stories are told, how news is reported and how content is created. AI voice and deepfake technologies once required extensive resources and skills; they are now widely accessible, enabling creators and media professionals to push boundaries in storytelling and communication.

From reviving historical figures to breaking language barriers, AI opens exciting possibilities. Yet, the emergence of hyper-realistic synthetic media raises questions about verification, authenticity and ethical reporting.

With voice clones convincingly mimicking real individuals and deepfakes obscuring the line between reality and fabrication, how can we tell the difference? And how can media professionals or educators uphold integrity and trust in a landscape increasingly saturated with AI manipulation?

This panel brings together international experts in media literacy, journalism and conflict reporting to discuss the possibilities and perils at the heart of AI-driven communication. Covering topics such as deepfakes during climate disasters and voice cloning of politicians, it unpacks the complexities of truth and trust when media is so easy to fake.

This panel is presented with the support of the ARC Centre of Excellence for Automated Decision Making and Society (ADM+S), Swinburne University of Technology, and Western Sydney University’s ARC Linkage Project, Addressing Misinformation with Media Literacy.

Moderator

Prof Anthony McCosker

Director of Swinburne University’s Social Innovation Research Institute and a chief investigator in the ARC Centre of Excellence for Automated Decision Making and Society. Alongside his internationally recognised research on digital inclusion and the adoption and impact of new technologies, Anthony currently leads a three-year project on Critical Capabilities for Inclusive AI. Recent co-authored books include Data for Social Good (2023), Everyday Data Cultures (2022) and Automating Vision (2020).

Panellists

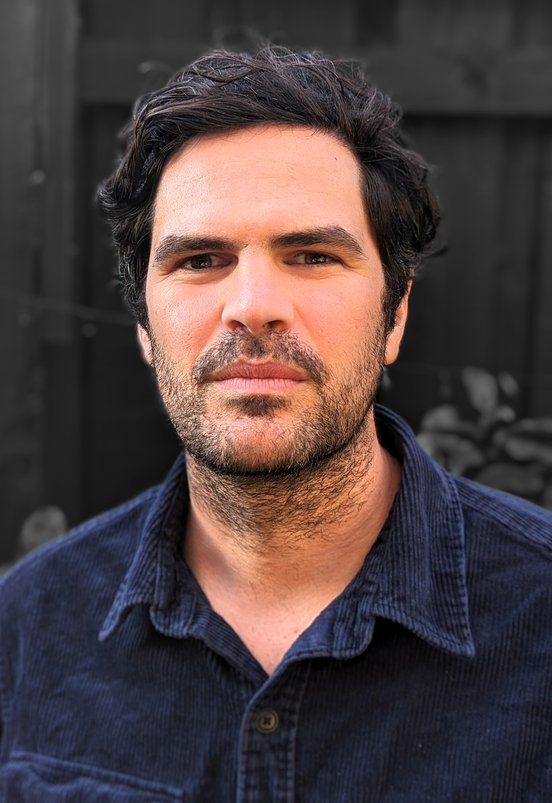

Mark Doman

Mark Doman is the visual journalism lead with the ABC’s Digital Story Innovations team, which produces interactive and data-driven stories. Mark and the team have won Walkley awards and international data journalism prizes for a range of stories including their work mapping forced detention camps in China, visualising the deliberate destruction of cultural heritage in Ukraine and investigating illegal logging in Australian forests.

Sam Gregory

An award-winning human rights advocate and technologist with 25+ years of experience addressing emerging technologies and civic engagement. As executive director of WITNESS, he leads efforts to harness technologies for human rights, including the Prepare, Don’t Panic initiative, which has shaped policy on deepfakes and generative AI. Gregory has testified before the US Congress on AI regulation and initiated the global Deepfakes Rapid Response Force, which connects journalists with forensic specialists.

Stephanie Hankey

Stephanie Hankey is a strategist and social entrepreneur specialising in the social and environmental impact of technology. As co-founder of Tactical Tech, she advances digital literacy through global public education initiatives. She co-curated The Glass Room, an award-winning exhibition that has engaged over 500,000 people across 70+ countries in critical discussions on technology and AI. A Royal College of Art graduate, she is an Ashoka and Harvard Loeb Fellow and a dual professor at Potsdam FHP.
